Foundation-Sec-8B: A Language Model for Cybersecurity - Introduction to the Cookbook

The Cisco Foundation AI team released Foundation-Sec-8B, an open-weight language model tailored for cybersecurity, this April. Although it is lightweight enough to run on 1-2 GPUs, it matches or outperforms models 10 times larger on key cybersecurity benchmarks. The model gained significant attention, with ~15,000 downloads just on the launch day, and over 50. We have also introduced a reasoning model, now in preview, which will soon be generally available.

We’re excited to introduce the Cisco Foundation AI Cookbook, a comprehensive GitHub toolkit to help users explore and use our AI models. This practical guide covers key topics such as security use cases, deployment options, and model fine-tuning techniques.

The cookbook offers a variety of materials for different use cases and applications, all built independently so you can choose any file that interests you and utilize our models. It is organized into four main categories: 1) Quickstarts, 2) Examples, 3) Adoptions and 4) Documents. The last category includes useful documents and links such as FAQs. Below, we’ll outline how to make the most of the first three categories to fully leverage our cybersecurity-focused models. Please note that this structure reflects the current version and may evolve as the cookbook is updated!

Access the Cisco Foundation AI Cookbook here: https://github.com/RobustIntelligence/foundation-ai-cookbook

This blog marks the start of a series showcasing how our products can address diverse cybersecurity use cases. In the coming weeks, we’ll share how to leverage AI for real-world cybersecurity challenges with sample code, demo UIs, and more.

Quickstart

Want to see how quickly you can start using our models? Check out our quickstart notebook, where you can download the models and run your first inference just by executing each cell sequentially. For faster performance, at least one GPU is recommended. If you’re working in a CPU-only environment, we suggest using quantized models, which we’ll cover in a later section.
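To give a feel for what those cells do, here is a minimal sketch of a first inference with the Hugging Face `transformers` library. It is not the notebook's actual code: the repository name `fdtn-ai/Foundation-Sec-8B`, the prompt wording, and the helper names `build_prompt` and `run_inference` are assumptions made for illustration, so check the cookbook for the exact values.

```python
# Assumed Hugging Face repo name; verify it against the cookbook before use.
MODEL_ID = "fdtn-ai/Foundation-Sec-8B"


def build_prompt(description: str) -> str:
    """Build a completion-style prompt (illustrative wording, not the notebook's)."""
    return (
        "Identify the most likely CWE for the following vulnerability.\n"
        f"Description: {description.strip()}\n"
        "CWE:"
    )


def run_inference(prompt: str, max_new_tokens: int = 32) -> str:
    """Download the model on first call and return only the generated text."""
    # Heavy imports are deferred so build_prompt works without them installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        # Half precision on GPU keeps memory use down; CPU falls back to float32.
        torch_dtype=torch.bfloat16 if torch.cuda.is_available() else torch.float32,
        device_map="auto",  # requires the `accelerate` package
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=False
        )
    # Slice off the prompt tokens so only the completion is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `run_inference(build_prompt("SQL injection in the login form allows authentication bypass."))` would trigger the download and print a completion. Expect the first call to take a while, since the weights are several gigabytes.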

Quickstart notebooks for other models, like the reasoning model, are also available. Since these models are currently in private preview, you can request early access by filling out this form.

Examples

Our models are designed for a variety of cybersecurity use cases, including but not limited to:

- SOC Acceleration: Automating alert triage, summarizing incidents, and drafting case notes.

- Proactive Threat Defense: Simulating attacks, mapping TTPs (Tactics, Techniques, and Procedures), and prioritizing vulnerabilities.

- Engineering Enablement: Reviewing code for security issues, validating configurations, and compiling compliance evidence.

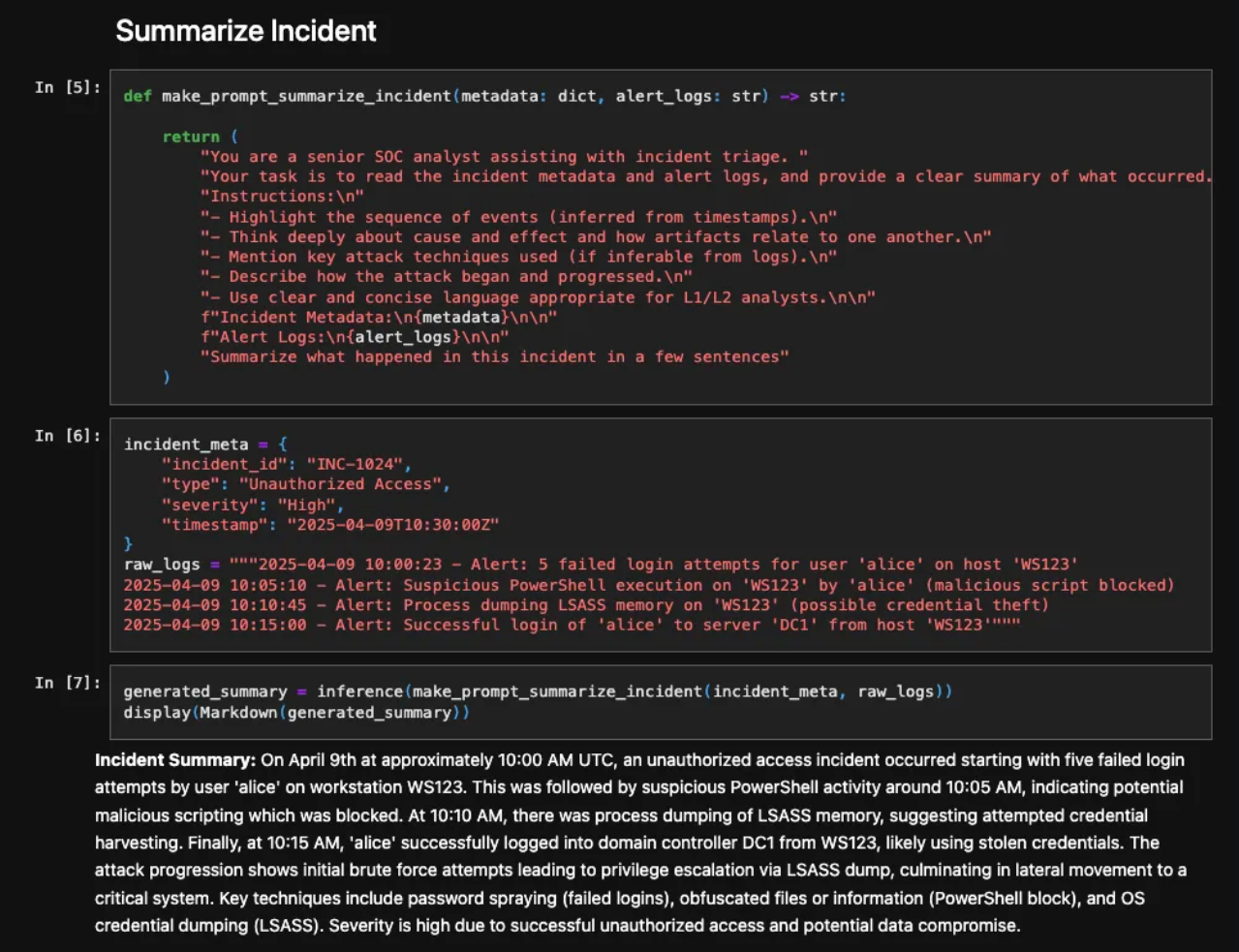

To support these use cases, we’ve created dedicated notebooks tailored to each scenario, using the most suitable model for the task. For instance, the attached screenshot is from this notebook, which demonstrates an end-to-end incident investigation use case. This notebook automates critical phases of incident investigation through a coordinated set of LLM-powered agents. These agents handle tasks like triaging, summarizing, planning next steps, and generating recommendations. The process culminates in detailed investigation reports for SOC teams, significantly reducing manual effort and streamlining operations.
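The coordination pattern described above can be sketched as a simple sequential pipeline, where each stage sees the findings of the stages before it. This is a simplified illustration and not the notebook's implementation: the stage names, the instructions, and the `investigate` and `render_report` helpers are hypothetical, and `llm` stands for any function that maps a prompt to a completion.

```python
from typing import Callable, Dict, List, Tuple

LLM = Callable[[str], str]  # any function that maps a prompt to a completion

# Hypothetical stages mirroring the phases named above.
STAGES: List[Tuple[str, str]] = [
    ("triage", "Classify the severity of this alert and justify it briefly."),
    ("summary", "Summarize the incident in three sentences."),
    ("next_steps", "List the next investigation steps."),
    ("recommendations", "Recommend containment and remediation actions."),
]


def investigate(alert: str, llm: LLM) -> Dict[str, str]:
    """Run each stage in order, feeding earlier findings into later prompts."""
    findings: Dict[str, str] = {}
    for name, instruction in STAGES:
        context = "\n".join(f"[{k}] {v}" for k, v in findings.items())
        prompt = (
            f"{instruction}\n\nAlert:\n{alert}\n\n"
            f"Findings so far:\n{context or 'none'}\n"
        )
        findings[name] = llm(prompt).strip()
    return findings


def render_report(alert: str, findings: Dict[str, str]) -> str:
    """Assemble the stage outputs into a plain-text report."""
    lines = ["INCIDENT INVESTIGATION REPORT", f"Alert: {alert}", ""]
    for name, _ in STAGES:
        lines.append(f"{name.replace('_', ' ').title()}: {findings[name]}")
    return "\n".join(lines)
```

Because `llm` is just a callable, the same pipeline can be exercised with a stub during testing and pointed at a real model later. A production version would likely add output validation and error handling between stages.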

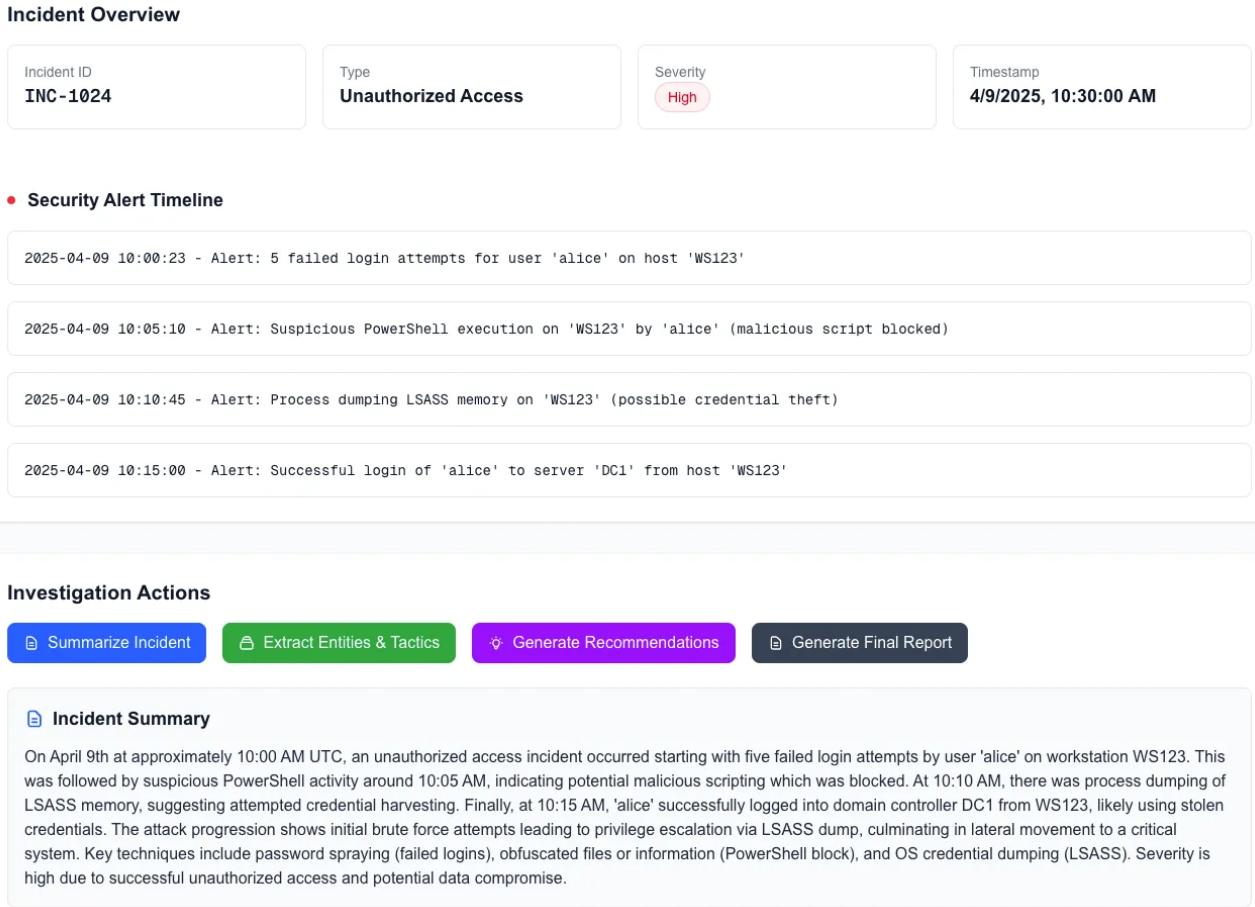

When using the models in production, you may want to build applications that call them behind the scenes. The attached UI screenshot shows how this code can be integrated into a day-to-day application. We will explore sample applications in upcoming blogs.

Adoptions

We go a step further to demonstrate how our models can be applied across various use cases and integrated into production environments.

Fine-tuning

Fine-tuning is a powerful way to adapt our models to specific tasks or domains, and the cookbook provides step-by-step guidance to help you get started. The fine-tuning notebooks use a cybersecurity description classification use case to demonstrate the entire process, including data preparation, model training, and performance evaluation.

We offer two fine-tuning approaches: one retains the original causal language model structure, while the other replaces the final layer with a classification head, allowing you to choose the method that best suits your needs.
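The difference between the two approaches shows up mainly in how training examples are shaped and which model class is loaded. The sketch below illustrates this with the Hugging Face `transformers` auto classes. The label set, the prompt template, and the helper names are hypothetical, and the cookbook's notebooks may prepare data differently.

```python
from typing import Dict

# Hypothetical label set; the cookbook's dataset may use different classes.
LABELS = ["malware", "phishing", "vulnerability"]
LABEL2ID = {label: i for i, label in enumerate(LABELS)}


def to_causal_lm_text(description: str, label: str) -> str:
    """Approach 1: keep the causal LM and teach it to write the label as text."""
    return f"Description: {description}\nCategory: {label}"


def to_classification_example(description: str, label: str) -> Dict[str, object]:
    """Approach 2: pass the raw text and supervise an integer class id."""
    return {"text": description, "label": LABEL2ID[label]}


def load_model(approach: str, model_id: str):
    """Load the model class that matches the chosen approach."""
    # Deferred import: transformers is heavy and not needed for data prep.
    from transformers import (
        AutoModelForCausalLM,
        AutoModelForSequenceClassification,
    )

    if approach == "causal_lm":
        return AutoModelForCausalLM.from_pretrained(model_id)
    if approach == "classification_head":
        # The language-modeling head is replaced by a newly initialized classifier.
        return AutoModelForSequenceClassification.from_pretrained(
            model_id,
            num_labels=len(LABELS),
            id2label=dict(enumerate(LABELS)),
            label2id=LABEL2ID,
        )
    raise ValueError(f"unknown approach: {approach}")
```

As a rough guide, the causal LM route keeps the model usable for free-form generation, while the classification head returns one score per class and is usually simpler to evaluate. With the classification head, the tokenizer and model config typically need a padding token defined before training on batches.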

Quantization

Running LLMs in resource-constrained environments can be challenging, but quantization offers a practical solution. Quantized models reduce memory and compute requirements by storing the model weights at lower precision, making it feasible to deploy them in CPU-only environments or on edge devices. Our cookbook includes a dedicated section that explains how to run our quantized models without sacrificing significant accuracy. This enables organizations to achieve faster inference times and lower operational costs, all while maintaining robust model performance for cybersecurity tasks.
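A quick back-of-the-envelope calculation shows why this matters. The sketch below estimates the memory needed for the weights alone at different precisions, assuming a round figure of 8 billion parameters for an "8B" model. The `weight_memory_gb` helper is written for this post and is not part of the cookbook.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


NUM_PARAMS = 8e9  # assumed round figure for an "8B" model

for name, bits in [("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"{name:>9}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
# fp16/bf16: 16.0 GB
#      int8: 8.0 GB
#     4-bit: 4.0 GB
```

These numbers are a lower bound. Real quantized formats store extra scaling metadata, and inference also needs memory for activations and the KV cache.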

Deployments

Effective deployment is key to unlocking the full potential of AI models in real-world scenarios. The cookbook provides guidance on deploying the base model for inference across platforms such as Amazon SageMaker AI. Sample code is included to demonstrate how to create inference endpoints with our models and run inference against them. These endpoints serve as essential building blocks for running models on demand or integrating them into applications.
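Once an endpoint exists, calling it from an application is a small amount of code. The sketch below uses the `boto3` SageMaker runtime client. The `{"inputs", "parameters"}` request shape is an assumption borrowed from common Hugging Face serving containers, and the endpoint name, region, and helper names are placeholders, so adjust them to match the container you actually deploy.

```python
import json
from typing import Any, Dict


def build_payload(
    prompt: str, max_new_tokens: int = 128, temperature: float = 0.1
) -> bytes:
    """Serialize a request body; the schema here is an assumed convention."""
    body: Dict[str, Any] = {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
        },
    }
    return json.dumps(body).encode("utf-8")


def invoke(endpoint_name: str, prompt: str, region: str = "us-east-1") -> Any:
    """Send one request to an existing endpoint and return the decoded JSON."""
    import boto3  # deferred so build_payload works without AWS dependencies

    client = boto3.client("sagemaker-runtime", region_name=region)
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=build_payload(prompt),
    )
    # The response body is a stream; its JSON layout depends on the container.
    return json.loads(response["Body"].read())
```

Keeping payload construction separate from the network call makes it easy to unit-test the request format without AWS credentials.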

Last but not least, we welcome your contributions! If you have questions, suggestions (including ideas for missing use cases), or encounter any issues, feel free to open an issue, start a discussion, or submit a pull request on the cookbook GitHub repo.

Look out for upcoming posts on using the cookbook for specific cybersecurity tasks!